You've built your WordPress site, published great content, and now you're waiting for visitors to arrive from Google. But here's the thing: Google won't magically know your site exists. It needs to discover, crawl, and index your pages first.

Understanding how Google crawls WordPress websites isn't just technical SEO jargon. It's the difference between your content sitting invisible in the digital void and actually showing up when people search for what you offer.

What Is Google Crawling and Why It Matters

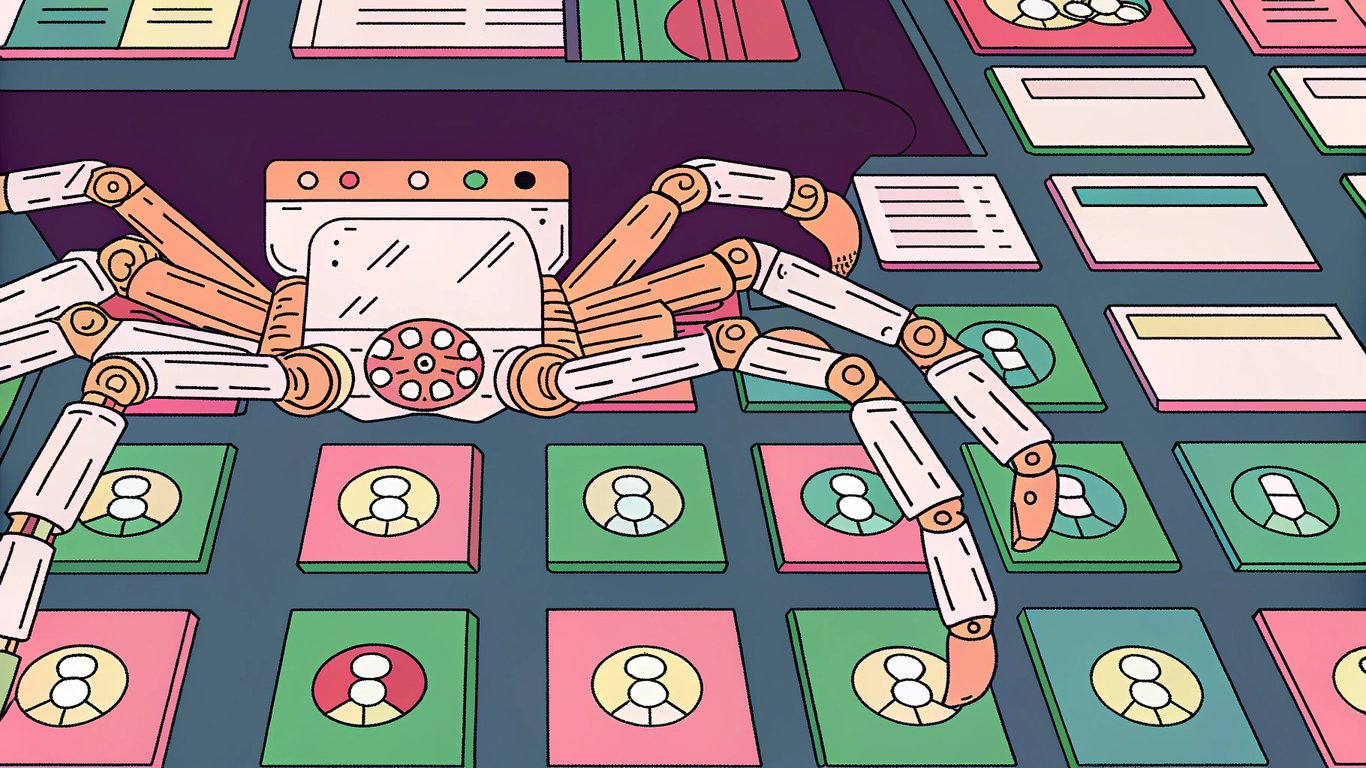

Crawling is how Google discovers and reads your website content. Think of it like a librarian cataloging books. Google sends out automated programs called web crawlers (or spiders) that visit web pages, read their content, and follow links to find more pages.

Without crawling, your pages can't get indexed. And without indexing, they won't appear in search results. It's that simple.

The crawler Google uses is called Googlebot. It visits billions of web pages constantly, looking for new content and updates to existing pages. When Googlebot finds your WordPress site, it analyzes the HTML, CSS, JavaScript, and all the content you've published.

How WordPress Sites Differ from Other Platforms

WordPress powers over 40% of all websites, but it has some unique characteristics that affect crawling. The platform generates dynamic URLs, creates multiple versions of content (like category and tag archives), and relies heavily on plugins that can either help or hurt your crawl efficiency.

WordPress also automatically generates certain pages that you might not want Google to crawl, like author archives or date-based archives. These can waste your crawl budget if you're not careful about managing them.

The Crawling, Indexing, and Ranking Process

Here's the journey your WordPress content takes:

- Discovery: Google finds your page through links, sitemaps, or direct submission

- Crawling: Googlebot visits and reads your page content

- Processing: Google analyzes and stores the information in its massive database

- Indexing: Your page gets added to Google's search index

- Ranking: Google determines where your page should appear for relevant searches

Each step matters, but crawling is where everything starts. If Google can't crawl your site properly, the rest doesn't happen.

How Google Discovers and Crawls Your WordPress Website

How Googlebot Finds Your WordPress Site

Google discovers your WordPress site through several methods. The most common is following links from other websites. When someone links to your site, Googlebot follows that link during its regular crawling activities.

You can also add your site to Google Search Console and submit a sitemap that lists your important pages. This tells Google your site exists without waiting for someone to link to it.

Internal linking plays a huge role too. When Googlebot lands on your homepage, it follows links to discover your other pages. If a page has no internal links pointing to it, Google might never find it.
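To make the link-following idea concrete, here is a minimal sketch of how a crawler might pull internal links out of a page. It is an illustration only, not how Googlebot is implemented; the `LinkExtractor` class, the `internal_links` helper, and the sample HTML are all hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Turn relative links like "/about/" into absolute URLs.
            self.links.append(urljoin(self.base_url, href))


def internal_links(html, base_url):
    """Return the sorted, de-duplicated links that stay on the same host."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    host = urlparse(base_url).netloc
    return sorted({u for u in parser.links if urlparse(u).netloc == host})


# Hypothetical homepage markup for illustration.
sample_html = """
<a href="/about/">About</a>
<a href="https://yoursite.com/blog/hello-world/">Post</a>
<a href="https://other.example/">External</a>
"""
print(internal_links(sample_html, "https://yoursite.com/"))
```

A page that never shows up in any list like this, on any page of your site, is the kind of orphan a crawler has no path to.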

Understanding Crawl Budget and Frequency

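Google doesn't crawl every page on your site every day. It allocates a crawl budget based on how much crawling your server can handle and how much demand there is for your content, which depends on things like your site's authority and how often your pages change.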

High-authority sites with fresh content get crawled more frequently. A small blog might get crawled every few days, while major news sites get crawled every few minutes. Your WordPress site's crawl frequency depends on how often you publish new content and how many quality backlinks you have.

For most WordPress sites with under 10,000 pages, crawl budget isn't a major concern. But if you're running a large site, you'll want to make sure Google spends its crawl budget on your important pages, not duplicate content or low-value archives.

The Role of XML Sitemaps in WordPress

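An XML sitemap is basically a roadmap of your website. It lists the pages you want Google to crawl and can carry metadata about each one, such as when it was last modified. The sitemap format also has fields for priority and change frequency, but Google has said it ignores those values, so an accurate last-modified date is the part worth getting right.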

WordPress has had built-in sitemap functionality since version 5.5. You can find your sitemap at yoursite.com/wp-sitemap.xml. Many SEO plugins like Yoast SEO and Rank Math offer more advanced sitemap features with better control over what gets included.
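If you want to see what a sitemap actually tells a crawler, a small script can read it. The sketch below parses a sitemap in the standard sitemaps.org format using Python's built-in XML parser. The sample URLs and date are made up for illustration, and `sitemap_entries` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content for illustration.
sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://yoursite.com/hello-world/</loc>
    <lastmod>2024-01-15T10:00:00+00:00</lastmod>
  </url>
  <url>
    <loc>https://yoursite.com/about/</loc>
  </url>
</urlset>"""


def sitemap_entries(xml_text):
    """Return a list of (url, lastmod) tuples; lastmod is None if absent."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        if loc:
            entries.append((loc.strip(), lastmod))
    return entries


for loc, lastmod in sitemap_entries(sample_sitemap):
    print(loc, lastmod or "(no lastmod)")
```

Note that yoursite.com/wp-sitemap.xml is a sitemap index that points to further sitemap files, so a real script would need to follow those references first.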

How Google Reads Your WordPress Content

Googlebot reads your WordPress site much like a web browser does. It processes HTML to understand your content structure, applies CSS to see how content is laid out, and executes JavaScript to render dynamic elements.

WordPress-specific elements like shortcodes, widgets, and custom post types all get crawled. But Google primarily focuses on your actual content: the text, images, videos, and links on your pages.

One thing to watch: if your WordPress theme relies heavily on JavaScript to load content, Google might have trouble seeing it. Most modern themes handle this fine, but it's worth checking in Google Search Console's URL Inspection tool to see what Google actually sees.

Setting Up Your WordPress Site for Optimal Crawling

Step 1: Check Your WordPress Visibility Settings

This is the most common mistake WordPress users make. There's a setting buried in your WordPress dashboard that can block search engines entirely.

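Go to Settings > Reading in your WordPress admin panel. Look for the checkbox that says "Discourage search engines from indexing this site." Make absolutely sure this is unchecked. If it's checked, WordPress asks search engines not to index your site (recent versions do this by adding a noindex robots meta tag to every page), so your content stays out of search results.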

This setting is often enabled by default on staging sites or during development, and people forget to turn it off when they launch.

Step 2: Set Up Google Search Console

Google Search Console is your direct line of communication with Google about your site's crawling and indexing status. Setting it up takes about 10 minutes.

- Go to Google Search Console and sign in with your Google account

- Click "Add Property" and enter your website URL

- Choose a verification method (HTML file upload, DNS record, or HTML tag)

- Complete the verification process

- Wait for Google to confirm ownership

Many WordPress SEO plugins can handle verification automatically. If you're using Yoast SEO or Rank Math, they'll walk you through the process.

Step 3: Submit Your XML Sitemap to Google

Once you've verified your site in Search Console, submit your sitemap. This helps Google discover all your pages faster.

In Google Search Console, go to Sitemaps in the left sidebar. Enter your sitemap URL (usually yoursite.com/wp-sitemap.xml or yoursite.com/sitemap_index.xml if you're using an SEO plugin) and click Submit.

Google will start processing your sitemap within a few hours, though it might take days or weeks to actually crawl all the URLs listed.

Step 4: Configure Your Robots.txt File

The robots.txt file tells search engines which parts of your site they should and shouldn't crawl. WordPress creates a virtual robots.txt file by default, but you can customize it.

You can view your current robots.txt file by visiting yoursite.com/robots.txt. A basic WordPress robots.txt might look like this:

User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://yoursite.com/wp-sitemap.xml

Most WordPress sites don't need a complex robots.txt file. The default setup works fine for typical blogs and business sites.
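It helps to understand why the Allow line in the example above works even though it sits under a broader Disallow. Google's documented behavior is that the most specific (longest) matching rule wins, and Allow wins a tie. Here is a simplified sketch of that logic. It assumes plain path prefixes with no wildcards and a single `User-agent` line per group, and the function names are hypothetical.

```python
def parse_rules(robots_txt, agent="*"):
    """Collect (directive, path) pairs from the group for one user agent.

    Simplified: assumes each group starts with a single User-agent line.
    """
    rules, active = [], False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            active = value == agent
        elif active and field in ("allow", "disallow") and value:
            rules.append((field, value))
    return rules


def is_allowed(rules, path):
    """Longest matching prefix wins; Allow wins a tie. No wildcard support."""
    best = ("allow", "")  # no matching rule means the path is allowed
    for directive, prefix in rules:
        if path.startswith(prefix):
            longer = len(prefix) > len(best[1])
            tie_allow = len(prefix) == len(best[1]) and directive == "allow"
            if longer or tie_allow:
                best = (directive, prefix)
    return best[0] == "allow"


robots_txt = """User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://yoursite.com/wp-sitemap.xml
"""

rules = parse_rules(robots_txt)
for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php", "/blog/"):
    print(path, "allowed" if is_allowed(rules, path) else "blocked")
```

The admin-ajax.php path is allowed because its Allow rule is longer than the Disallow rule covering the rest of /wp-admin/.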

Step 5: Verify Googlebot Can Access Your Site

Use the URL Inspection tool in Google Search Console to test if Google can access your pages. Enter any URL from your site, and Google will show you exactly what Googlebot sees when it crawls that page.

Look for any errors or warnings. The tool will tell you if there are crawl issues, indexing problems, or if the page is blocked by robots.txt.

Common WordPress Issues That Block Google Crawling

WordPress Settings That Block Crawlers

Beyond the visibility setting we mentioned earlier, WordPress and SEO plugins can add noindex tags to specific pages. These tags tell Google not to index certain content.

Check your SEO plugin settings. Some plugins automatically noindex category pages, tag pages, or author archives. This might be intentional, but make sure you're not accidentally blocking pages you want indexed.
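If you'd rather check a page's HTML yourself than dig through plugin menus, you can look for the robots meta tag directly. The following is a rough sketch that scans HTML for a noindex directive. `RobotsMetaParser` and `has_noindex` are hypothetical names, and the sketch only checks meta tags, not HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Gathers directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html):
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


print(has_noindex("<meta name='robots' content='noindex, nofollow' />"))
print(has_noindex('<meta name="robots" content="index, follow">'))
```

Run it against the saved source of any page you expect to rank. A True result on an important page means something on your site is adding a noindex tag.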

Plugin Conflicts and Performance Issues

Too many plugins can slow down your site, which affects crawling. If your pages respond slowly or time out, Googlebot may fetch fewer of them per visit or abandon a page before it finishes loading.

Security plugins sometimes block Googlebot by mistake, treating it like a malicious bot. Check your security plugin's settings and make sure Googlebot isn't being blocked.
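The flip side is that fake bots often claim to be Googlebot in their user agent string, which is one reason security plugins get aggressive. Google documents a way to tell the difference: do a reverse DNS lookup on the IP address, check that the hostname belongs to a Google domain, then confirm the hostname resolves back to the same IP. Here is a minimal sketch of that check. The function names are hypothetical, and this version only handles IPv4.

```python
import socket

# Domains Google documents for its crawlers' reverse DNS names.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_google_hostname(hostname):
    """True if the hostname is a subdomain of a Google crawler domain."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_SUFFIXES)


def is_verified_googlebot(ip_address):
    """Reverse lookup, domain check, then forward-confirm (IPv4 only)."""
    try:
        hostname = socket.gethostbyaddr(ip_address)[0]
        if not is_google_hostname(hostname):
            return False
        forward_ips = socket.gethostbyname_ex(hostname)[2]
    except OSError:
        # Covers failed lookups in either direction.
        return False
    return ip_address in forward_ips


print(is_google_hostname("crawl-66-249-66-1.googlebot.com"))
print(is_google_hostname("googlebot.com.attacker.example"))
```

If an IP your security plugin blocked passes this check, the plugin is turning away the real Googlebot and its rules need loosening.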

Server and Hosting Problems

Cheap shared hosting can cause crawl issues. If your server is slow or frequently times out, Google will crawl your site less often to avoid overloading it.

Server errors (5xx status codes, such as 500 or 503) tell Google something's wrong with your site. Too many of these, and Google might reduce how often it crawls your pages.
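Your server's access log is the most direct place to see what Googlebot is getting back. As a rough sketch, the script below counts Googlebot requests by status class. It assumes the common "combined" log format used by Apache and Nginx; the sample lines are invented, and the helper name is hypothetical.

```python
import re
from collections import Counter

# Matches the request and status portion of a combined-format log line.
LOG_LINE = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')

# Hypothetical log lines for illustration.
sample_log = [
    '66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /hello-world/ HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jan/2024:10:00:05 +0000] "GET /shop/ HTTP/1.1" 500 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jan/2024:10:00:09 +0000] "GET /cart/ HTTP/1.1" 503 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2024:10:00:11 +0000] "GET / HTTP/1.1" 200 8000 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def googlebot_status_counts(lines):
    """Count requests whose user agent mentions Googlebot, grouped as 2xx/3xx/4xx/5xx."""
    counts = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = LOG_LINE.search(line)
        if match:
            counts[match.group("status")[0] + "xx"] += 1
    return counts


print(dict(googlebot_status_counts(sample_log)))
```

This matches on the user agent string alone, which anyone can fake, so treat the numbers as a rough signal. A growing share of 5xx responses is a cue to talk to your host.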

Duplicate Content and Canonical Issues

WordPress can create duplicate content without you realizing it. The same post might be accessible through multiple URLs: the main post URL, category archives, tag archives, and date archives.

Canonical tags solve this problem by telling Google which version is the "main" one. Most SEO plugins handle this automatically, but it's worth checking that your canonical tags are set up correctly.
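Checking a canonical tag by hand means viewing the page source and searching for rel="canonical". A short script can do the same thing. This is a minimal sketch with hypothetical names, and the sample markup is invented.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


# Hypothetical <head> markup for a post.
page = '<head><link rel="canonical" href="https://yoursite.com/hello-world/" /></head>'
print(find_canonical(page))
```

For a single post, the canonical should normally be the post's own URL. If it points somewhere unexpected, or is missing entirely, check your SEO plugin's settings.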

Broken Links and 404 Errors

Broken links waste crawl budget and create a poor user experience. When Googlebot encounters too many 404 errors, it might assume your site isn't well-maintained.

Use Google Search Console's Page indexing report (previously called the Coverage report) to find 404 errors. Fix important ones by either restoring the content or setting up 301 redirects to relevant pages.
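One thing to watch as redirects pile up over the years: old redirects that point to URLs which themselves redirect. These chains add extra hops for crawlers and visitors. The sketch below walks a redirect map and reports how many hops each old URL takes. The map and the `resolve_redirect` function are hypothetical; your redirect plugin or server config holds the real rules.

```python
def resolve_redirect(redirects, path, max_hops=10):
    """Follow a redirect map and return (final_path, hops).

    Raises ValueError if the chain loops or exceeds max_hops.
    """
    seen = [path]
    while path in redirects:
        path = redirects[path]
        if path in seen or len(seen) > max_hops:
            chain = " -> ".join(seen + [path])
            raise ValueError(f"redirect loop or chain too long: {chain}")
        seen.append(path)
    return path, len(seen) - 1


# Hypothetical redirect rules: old path -> new path.
redirects = {
    "/older-post/": "/old-post/",
    "/old-post/": "/new-post/",
}

for old in redirects:
    final, hops = resolve_redirect(redirects, old)
    print(f"{old} ends at {final} after {hops} hop(s)")
```

Anything that takes more than one hop is a candidate for pointing straight at the final URL.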

How to Request Google to Recrawl Your WordPress URLs

When You Should Request a Recrawl

You don't need to request a recrawl every time you publish new content. Google will find it naturally through your sitemap and internal links.

But there are times when requesting a recrawl makes sense:

- You've fixed a major error on an important page

- You've updated outdated content with fresh information

- You've removed a noindex tag from a page you want indexed

- You've launched a new product or service page that needs quick indexing

Using the URL Inspection Tool for Individual Pages

In Google Search Console, use the URL Inspection tool at the top of the page. Enter the URL you want recrawled, wait for Google to fetch the current version, then click "Request Indexing."

Google limits how many indexing requests you can make, so use this feature strategically. Don't spam it with every minor update.

Resubmitting Your Sitemap After Major Updates

If you've made significant changes to your site structure or published a bunch of new content, resubmit your sitemap in Google Search Console. This signals to Google that there's fresh content to crawl.

You don't need to do this often. Google checks your sitemap regularly on its own.

Expected Timeframes for Crawling and Indexing

Here's the reality: it's not instant. Minor changes might show up in search results within a day or two. Bigger structural updates can take weeks.

High-authority sites get crawled faster, while smaller or newer sites might wait longer. There's no guaranteed timeline, which can be frustrating when you're eager to see results.

Optimizing Your WordPress Site for Faster and Better Crawling

Improving Site Speed and Performance

Page speed directly affects how efficiently Google can crawl your site. Faster sites get crawled more thoroughly because Googlebot can visit more pages in the same amount of time.

Use caching plugins, optimize your images, and choose quality hosting. Tools like Google PageSpeed Insights will show you specific areas to improve.

Creating a Logical Site Structure

Organize your content with clear categories and a logical hierarchy. Every page should be reachable within three clicks from your homepage.

Internal linking helps Googlebot discover your content and understand which pages are most important. Link to your best content from multiple places on your site.
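The three-click guideline is easy to check if you have a map of which pages link to which. A breadth-first search from the homepage gives each page's click depth, and any page the search never reaches has no internal links pointing to it. Here is a minimal sketch using a made-up link map; in practice you would build the map from a crawl of your own site.

```python
from collections import deque


def click_depths(link_graph, home):
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the pages it links to.
site = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/post-a/"],
    "/blog/post-a/": ["/blog/post-b/"],
    "/blog/post-b/": ["/blog/post-c/"],
    "/orphan/": [],
}

depths = click_depths(site, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
orphans = sorted(set(site) - set(depths))

print("More than three clicks deep:", too_deep)
print("Not reachable from the homepage:", orphans)
```

In this example /blog/post-c/ sits four clicks deep and /orphan/ is unreachable, so both would benefit from a link on a page closer to the homepage.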

Using WordPress SEO Plugins Effectively

SEO plugins like Yoast SEO and Rank Math handle many crawl optimization tasks automatically. They generate sitemaps, add canonical tags, and let you control which pages get indexed.

Don't install multiple SEO plugins. Pick one and configure it properly. Having two SEO plugins can create conflicts and confuse Google.

Implementing Structured Data and Schema Markup

Structured data helps Google understand your content better. It won't directly improve crawling, but it can lead to rich results in search, which drives more traffic.

Many WordPress themes and plugins add basic schema markup automatically. You can add more specific markup for recipes, reviews, products, and other content types using plugins or custom code.
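If you go the custom code route, structured data is usually added as a JSON-LD script tag in the page's HTML. To show what that looks like, here is a small sketch that builds one for an article using schema.org's Article type. The `article_jsonld` function and the sample values are hypothetical, and the properties shown are only a basic subset.

```python
import json


def article_jsonld(headline, author, date_published, url):
    """Build a JSON-LD <script> tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical post details for illustration.
print(article_jsonld(
    headline="How Google Crawls WordPress Websites",
    author="Jane Doe",
    date_published="2024-01-15",
    url="https://yoursite.com/how-google-crawls-wordpress/",
))
```

Whatever generates your markup, it's worth running the result through Google's Rich Results Test before relying on it.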

Mobile-First Crawling Considerations

Google primarily uses the mobile version of your site for crawling and indexing. Make sure your WordPress theme is responsive and works well on mobile devices.

Test your site on actual mobile devices, not just desktop browser simulators. Sometimes things that look fine in responsive mode have issues on real phones.

Managing Crawl Budget for Large WordPress Sites

If you're running a large WordPress site with thousands of pages, crawl budget becomes important. You want Google spending its time on your valuable content, not low-quality archive pages.

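Use robots.txt to block sections that never need crawling. Noindex pages that don't need to rank, but leave those pages crawlable: Googlebot has to fetch a page to see its noindex tag, so blocking the same URL in robots.txt hides the tag from Google. Consolidate thin content. Every page Google crawls should provide real value.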
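A good first step is finding out how many of your URLs fall into the low-value bucket. The sketch below sorts URLs into common WordPress archive types based on default permalink patterns such as /author/, /tag/, and date paths. The patterns are assumptions about a typical setup; adjust them if you've customized your permalink bases.

```python
import re
from urllib.parse import parse_qs, urlparse

# Assumed default WordPress URL patterns; customize for your permalink settings.
ARCHIVE_PATTERNS = [
    ("author archive", re.compile(r"^/author/[^/]+/")),
    ("tag archive", re.compile(r"^/tag/[^/]+/")),
    ("date archive", re.compile(r"^/\d{4}/(\d{2}/)?(\d{2}/)?$")),
]


def classify(url):
    """Return a label for likely low-value URLs, or None for everything else."""
    parsed = urlparse(url)
    if "s" in parse_qs(parsed.query):
        return "internal search"
    for label, pattern in ARCHIVE_PATTERNS:
        if pattern.search(parsed.path):
            return label
    return None


# Hypothetical URLs, for example from a crawl export or server log.
urls = [
    "https://yoursite.com/author/jane/",
    "https://yoursite.com/2024/01/",
    "https://yoursite.com/2024/01/15/hello-world/",
    "https://yoursite.com/?s=shoes",
]
for url in urls:
    print(url, "->", classify(url) or "content")
```

If archive and search URLs make up a large share of what Googlebot requests, that's where blocking or noindexing will free up the most crawl budget.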

Monitoring and Maintaining Healthy Crawl Status

Reading Google Search Console Crawl Reports

Google Search Console provides detailed reports about how Google crawls your site. The Page indexing report (previously called the Coverage report) shows which pages are indexed and lists the reasons other pages were left out, including errors and deliberate exclusions.

Check these reports monthly. Look for sudden spikes in errors or drops in indexed pages. These often indicate problems that need fixing.
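If you record the indexed page count each month, spotting a sudden change doesn't have to rely on eyeballing a chart. Here is a minimal sketch that flags any month-to-month swing above a threshold. The 20% threshold and the sample numbers are arbitrary assumptions, so pick a value that suits how much your site normally fluctuates.

```python
def flag_sudden_changes(counts, threshold=0.2):
    """Return (index, previous, current) for each change larger than the threshold."""
    flagged = []
    for i in range(1, len(counts)):
        previous, current = counts[i - 1], counts[i]
        if previous and abs(current - previous) / previous > threshold:
            flagged.append((i, previous, current))
    return flagged


# Hypothetical monthly indexed-page counts copied from Search Console.
indexed_pages = [120, 125, 130, 90, 92]

for index, previous, current in flag_sudden_changes(indexed_pages):
    print(f"Month {index + 1}: indexed pages went from {previous} to {current}")
```

With these numbers, the drop from 130 to 90 gets flagged while the smaller month-to-month movements do not.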

Setting Up Crawl Monitoring Alerts

Google Search Console can email you when it detects critical issues like server errors or security problems. Enable these notifications so you can respond quickly to crawl issues.

Regular Maintenance Tasks for WordPress SEO

Set up a maintenance schedule:

- Weekly: Check for broken links and fix critical errors

- Monthly: Review Search Console reports and update outdated content

- Quarterly: Audit your site structure and clean up unnecessary pages

Regular maintenance prevents small issues from becoming major crawl problems.

Staying Updated with Google's Algorithm Changes

Google's crawling technology evolves constantly. In 2026, AI-driven indexing and content understanding continue to improve, and Google keeps getting better at interpreting context, user intent, and content quality.

Follow the Google Search Central Blog for official updates. When Google announces changes to how it crawls or indexes content, adjust your WordPress site accordingly.

Taking Control of How Google Crawls Your WordPress Site

Getting Google to crawl your WordPress site effectively isn't complicated, but it does require attention to detail. Most crawl issues come from simple configuration mistakes that take minutes to fix.

Quick Action Checklist

Here's what you should do right now:

- Verify your WordPress visibility settings aren't blocking search engines

- Set up Google Search Console if you haven't already

- Submit your XML sitemap to Google

- Check the URL Inspection tool to confirm Google can access your pages

- Review your robots.txt file for any accidental blocks

- Test your site speed and fix major performance issues

These steps resolve most crawl issues on typical WordPress sites. If pages still aren't appearing in search results after crawling, see our guide on fixing indexing issues.

Additional Resources and Tools

Keep these resources bookmarked:

- Google Search Console for monitoring crawl status

- Yoast SEO or Rank Math for WordPress SEO management

- Google PageSpeed Insights for performance testing

- Google Search Documentation for technical guidance

Understanding how Google crawls WordPress websites gives you control over your search visibility. This is a foundational technical SEO skill that every site owner should master. For sites using AI autoblogging, proper crawl optimization ensures your automatically generated content gets discovered and indexed just as quickly as manually written posts. Fix the basics, monitor your crawl status regularly, and focus on creating content worth crawling in the first place.